Learning Scribbles for Dense Depth: Weakly-Supervised Single Underwater Image Depth Estimation Boosted by Multi-Task Learning

Kunqian Li¹, Xiya Wang¹, Wenjie Liu¹, Qi Qi², Guojia Hou³, Zhiguo Zhang⁴, Kun Sun⁵

¹College of Engineering, Ocean University of China

²College of Computer Science and Technology, Ocean University of China

³College of Computer Science and Technology, Qingdao University

⁴College of Electrical Engineering and Automation, Shandong University of Science and Technology

⁵School of Computer Science, China University of Geosciences

Abstract

Estimating depth from a single underwater image is one of the main tasks of underwater visual perception. However, data-driven underwater depth estimation methods have long struggled to make breakthroughs because large numbers of ground-truth references are hard to obtain. On the one hand, depth acquisition equipment is expensive and hard for a wide range of users to deploy across diverse ocean scenes, so sample diversity is difficult to guarantee; on the other hand, manually annotating dense depth relationships is almost impossible. In this paper, we establish a new underwater depth estimation benchmark, SUIM-SDA, by extending the SUIM dataset with more than 6,000 manually annotated depth trendlines and 25 million depth-ranked pixel pairs. Using the sparse depth relation annotations provided by SUIM-SDA and the semantic information provided by SUIM, we design a new multi-stage multi-task learning framework that predicts a dense relative depth map from a single underwater image. Comprehensive comparisons and ablation studies on the publicly available SQUID dataset and our new benchmark demonstrate the effectiveness of the proposed weakly supervised strategy for dense relative depth estimation. The new benchmark, source code and pre-trained models are all available at the project home page: https://wangxy97.github.io/WsUIDNet.
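As an illustration of how sparse depth-ranked pixel pairs can supervise a dense relative depth map, the sketch below implements a pairwise ranking loss in the style of the Depth in the Wild (DIW) loss of Chen et al. This is a substituted, well-known technique shown for illustration only: the exact loss used by WsUID-Net is not given on this page, and the pair format `(row_i, col_i, row_j, col_j, r)` and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical pair format: (row_i, col_i, row_j, col_j, r), where r = +1 means
# pixel i is farther than pixel j, r = -1 means it is closer, and r = 0 means
# the two pixels are labelled as having roughly equal depth.
Pair = Tuple[int, int, int, int, int]


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def ranking_loss(depth: Sequence[Sequence[float]], pairs: List[Pair]) -> float:
    """Mean DIW-style ranking loss of a predicted relative depth map over sparse pairs."""
    total = 0.0
    for ri, ci, rj, cj, r in pairs:
        diff = depth[ri][ci] - depth[rj][cj]
        if r == 0:
            total += diff * diff          # equal-depth pairs: pull the two predictions together
        else:
            total += softplus(-r * diff)  # ordered pairs: penalise predicting the wrong order
    return total / len(pairs)
```

A pair whose predicted order agrees with its label contributes less than log 2, while a reversed order contributes more, so minimizing the mean over many sparse pairs pushes the whole predicted map toward the annotated ordering.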


Highlights

- To facilitate research on underwater image depth estimation, we establish a new benchmark, SUIM-SDA, by extending the Segmentation of Underwater IMagery (SUIM) dataset with Sparse Depth Annotation (SDA). SUIM-SDA is the first large-scale underwater image depth estimation benchmark, containing 1,596 images, more than 6,000 depth trendlines and 25 million pixel pairs with relative depth labels.

- We design three adaptive strategies that generate depth-ranked samples from depth trendlines. Diverse samples with depth-rank labels can thus be produced efficiently at low annotation cost, enabling a weakly supervised strategy that learns dense depth maps with depth trendlines as the supervisory signal.

- We design a weakly supervised, two-stage multi-task knowledge distillation framework for single underwater image depth estimation, namely WsUID-Net, which learns from sparse depth annotations to predict dense relative depth maps. Extensive experiments and ablation studies on the widely used SQUID benchmark and our SUIM-SDA benchmark verify the advantages of our approach.
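The three generation strategies are not detailed on this page. As a hedged sketch of the general idea only, the code below assumes a trendline is a polyline drawn from near to far, resamples it at a fixed pixel step, and labels any two resampled points so that the one later along the line is farther. The helper names, the `step`, `min_gap` and `seed` parameters, and the pair format are all hypothetical, and the sketch should not be read as any of the paper's three strategies.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]            # (x, y) in pixel coordinates
Pair = Tuple[int, int, int, int, int]  # (row_i, col_i, row_j, col_j, r); r = +1: pixel i is farther


def resample_polyline(line: List[Point], step: float) -> List[Point]:
    """Place points every `step` pixels along a polyline, keeping its drawing order."""
    out = [line[0]]
    carry = 0.0  # arc length travelled since the last emitted point
    for (x0, y0), (x1, y1) in zip(line, line[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        t = step - carry  # distance into this segment of the next point to emit
        while t <= seg:
            out.append((x0 + (x1 - x0) * t / seg, y0 + (y1 - y0) * t / seg))
            t += step
        carry = seg - (t - step)  # leftover length carried into the next segment
    return out


def pairs_from_trendline(line: List[Point], step: float, n_pairs: int,
                         min_gap: int = 1, seed: int = 0) -> List[Pair]:
    """Sample depth-ranked pixel pairs, assuming the trendline runs from near to far."""
    pts = [(round(y), round(x)) for x, y in resample_polyline(line, step)]
    pairs: List[Pair] = []
    if len(pts) <= min_gap:
        return pairs
    rng = random.Random(seed)
    for _ in range(n_pairs):
        a = rng.randrange(len(pts) - min_gap)     # index of the nearer point
        b = rng.randrange(a + min_gap, len(pts))  # at least `min_gap` steps farther along
        (ra, ca), (rb, cb) = pts[a], pts[b]
        pairs.append((rb, cb, ra, ca, +1))        # pixel i (later on the line) is farther
    return pairs
```

For example, `pairs_from_trendline([(40.0, 200.0), (60.0, 120.0), (90.0, 60.0)], step=4.0, n_pairs=100)` turns one scribble into 100 ranked pairs; raising `min_gap` keeps only pairs whose points lie far apart along the line, which are presumably less ambiguous.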

Overall Architecture

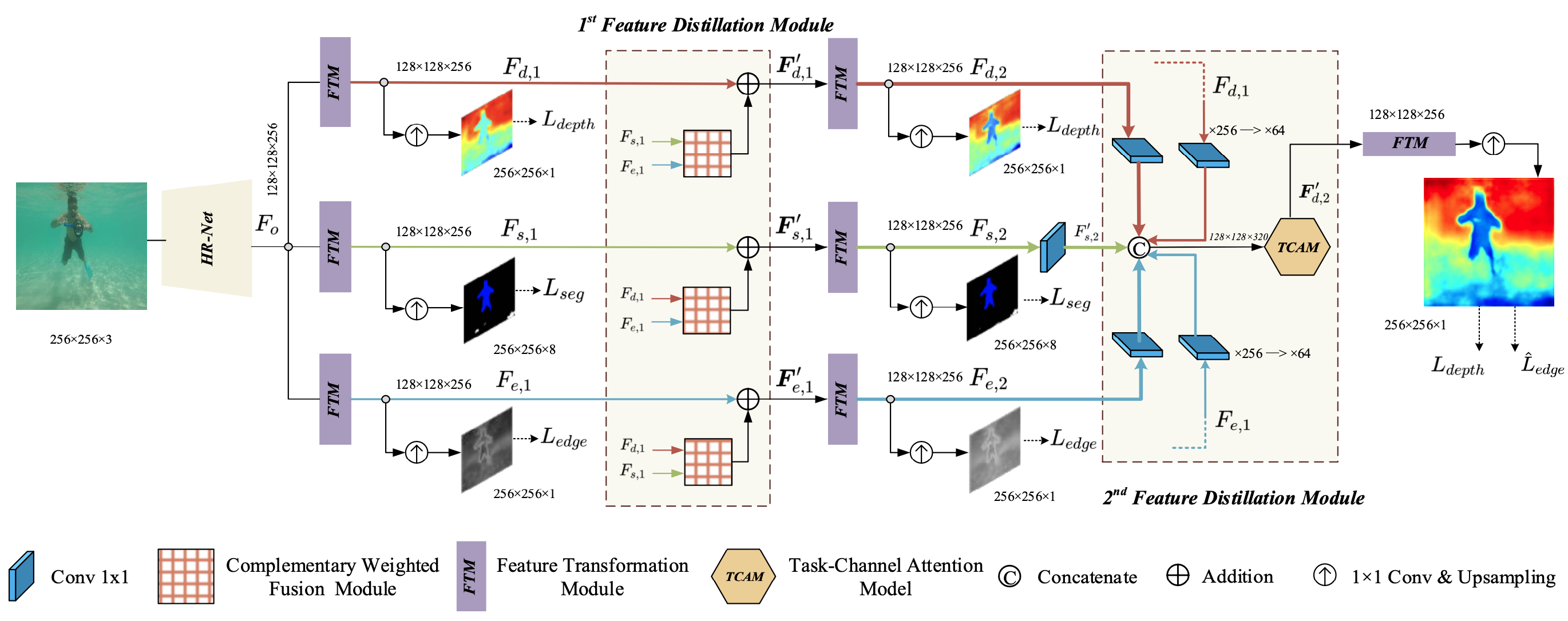

Fig. 1. The overall architecture of the proposed WsUID-Net. WsUID-Net consists of three branches, used for depth estimation, semantic segmentation and edge detection respectively, which together implement a weakly supervised depth estimation learning framework with auxiliary supervision from the associated tasks. In particular, we design a two-stage multi-task feature fusion and distillation strategy, in which the task-channel attention module (TCAM) and the proposed complementary weighted fusion module (CWF) handle channel fusion and spatial fusion, respectively.
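To make complementary weighted spatial fusion concrete, here is a minimal NumPy sketch under a stated assumption: a per-pixel weight map w is predicted from the concatenated features of two task branches by a 1x1 projection, and the fused feature is w * A + (1 - w) * B, so the two branches receive complementary weights at every location. The projection weights and function names are hypothetical; the actual CWF design is the one shown in the architecture figure, not this code.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def complementary_weighted_fusion(feat_a: np.ndarray, feat_b: np.ndarray,
                                  w_proj: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """Fuse two (C, H, W) feature maps with a per-pixel weight and its complement.

    w_proj has shape (2C,): a hypothetical 1x1 convolution mapping the
    concatenated features to a single-channel spatial weight map.
    """
    stacked = np.concatenate([feat_a, feat_b], axis=0)                # (2C, H, W)
    logits = np.tensordot(w_proj, stacked, axes=([0], [0])) + bias    # (H, W)
    weight = sigmoid(logits)[None]                                    # (1, H, W), broadcast over channels
    return weight * feat_a + (1.0 - weight) * feat_b
```

Because the two weights always sum to one, each location is a convex combination of the two task features, which keeps the fused map on the same scale as its inputs.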

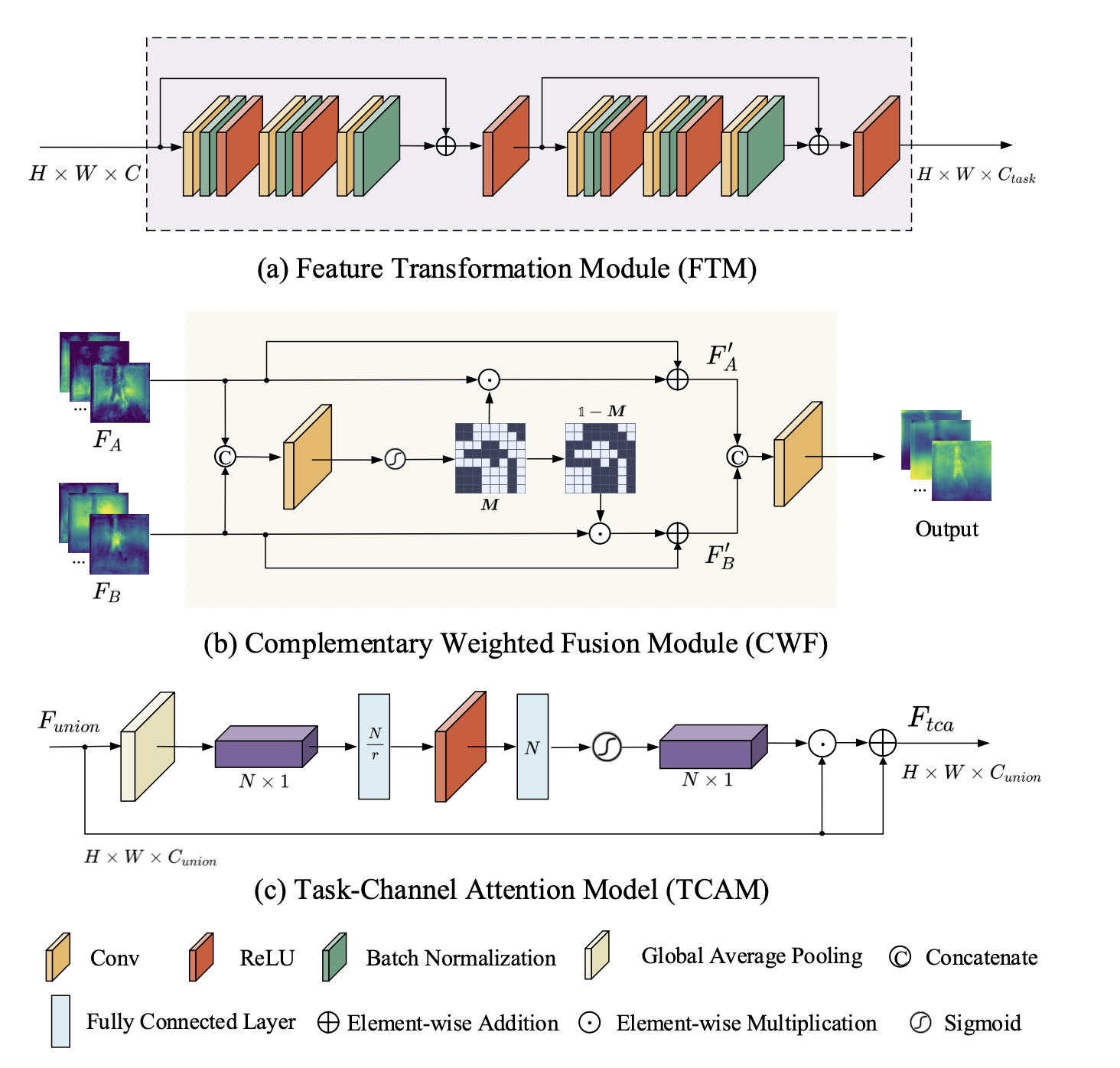

Fig. 2. The detailed structures of the key modules of WsUID-Net: (a) the feature transformation module (FTM) for the different tasks; (b) the weighted fusion module (WFM) used in the first-stage feature distillation to fuse the features of two different tasks; (c) the task-channel attention module (TCAM) for the second-stage feature distillation.
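The channel-fusion role of TCAM can be illustrated with squeeze-and-excitation style attention (Hu et al.) applied to the concatenated task features. This is a substituted, generic technique chosen for illustration; the bottleneck weights `w1`/`w2` and the function name are hypothetical, and the real TCAM structure is the one in panel (c).

```python
import numpy as np


def task_channel_attention(task_feats, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation style attention over the channels of several task features.

    task_feats: list of (C, H, W) arrays, one per task (e.g. depth, segmentation, edge).
    w1: (R, T*C) and w2: (T*C, R) are hypothetical weights of a bottleneck MLP.
    Returns the reweighted concatenated features, shape (T*C, H, W).
    """
    x = np.concatenate(task_feats, axis=0)        # (T*C, H, W)
    squeezed = x.mean(axis=(1, 2))                # global average pooling -> (T*C,)
    hidden = np.maximum(w1 @ squeezed, 0.0)       # ReLU bottleneck -> (R,)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))  # per-channel weights in (0, 1)
    return x * gates[:, None, None]
```

Since the gates are computed from all tasks' pooled statistics, a channel of one task can be amplified or suppressed depending on what the other tasks see, which is the kind of cross-task channel selection the caption attributes to the second stage.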

Results

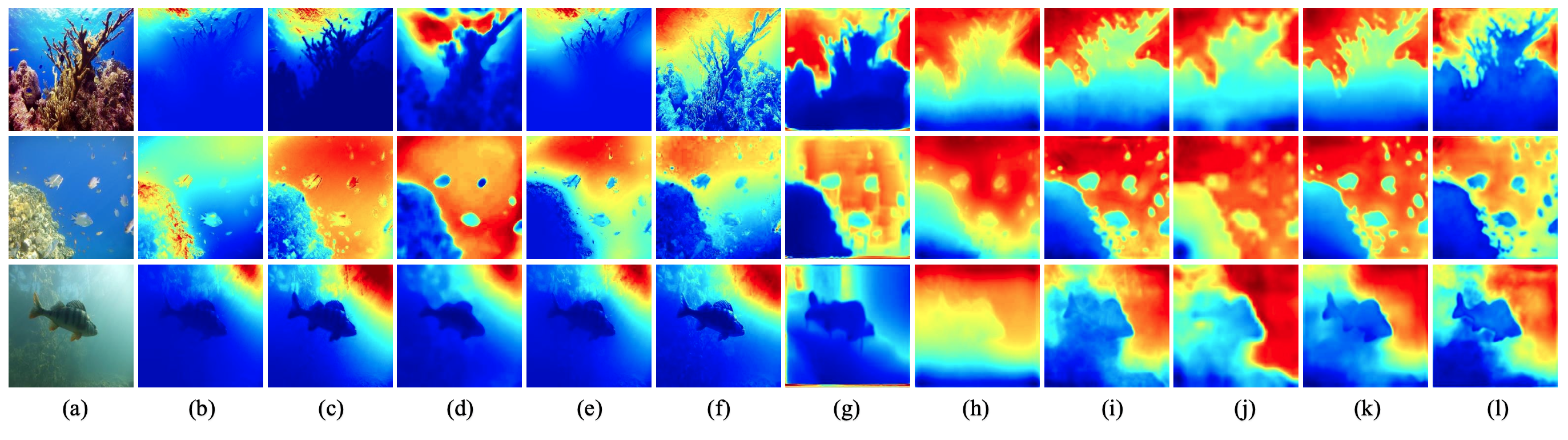

Fig. 3. Visual comparison of depth estimation on images from the test set of the proposed SUIM-SDA dataset. From left to right: (a) the input underwater image, followed by the depth estimation results of (b) DCP, (c) UDCP, (d) GDCP, (e) CBF, (f) Hazeline, (g) UW-Net, (h) DIW, (i) PAD-Net, (j) MTAN, (k) MTI-Net and (l) the proposed WsUID-Net.

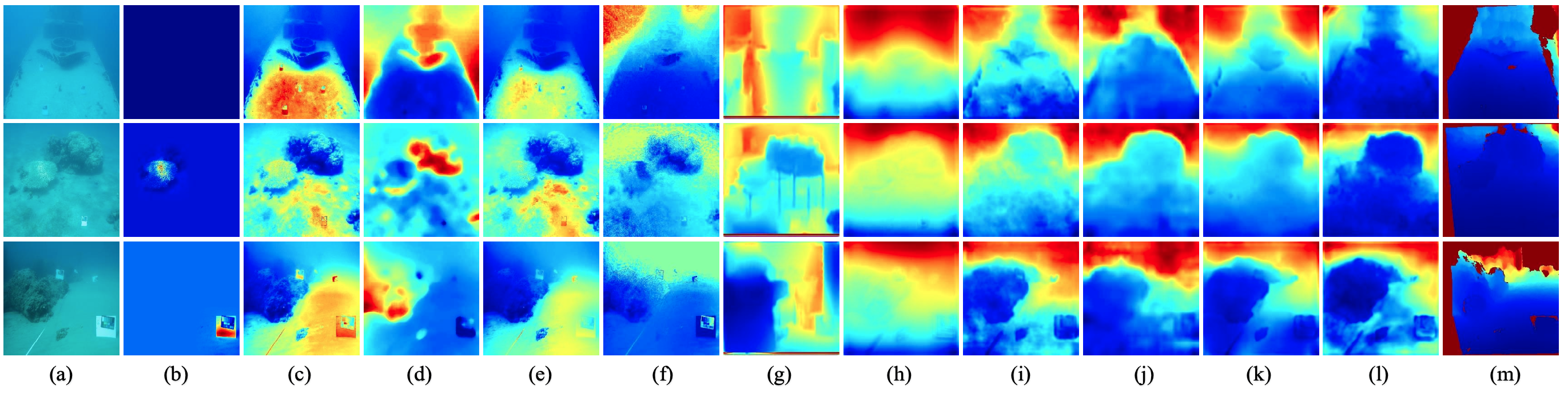

Fig. 4. Visual comparison of depth estimation on images from the challenging SQUID dataset. From left to right: (a) the input underwater image, followed by the depth estimation results of (b) DCP, (c) UDCP, (d) GDCP, (e) CBF, (f) Hazeline, (g) UW-Net, (h) DIW, (i) PAD-Net, (j) MTAN, (k) MTI-Net and (l) the proposed WsUID-Net, and (m) the ground-truth maps.

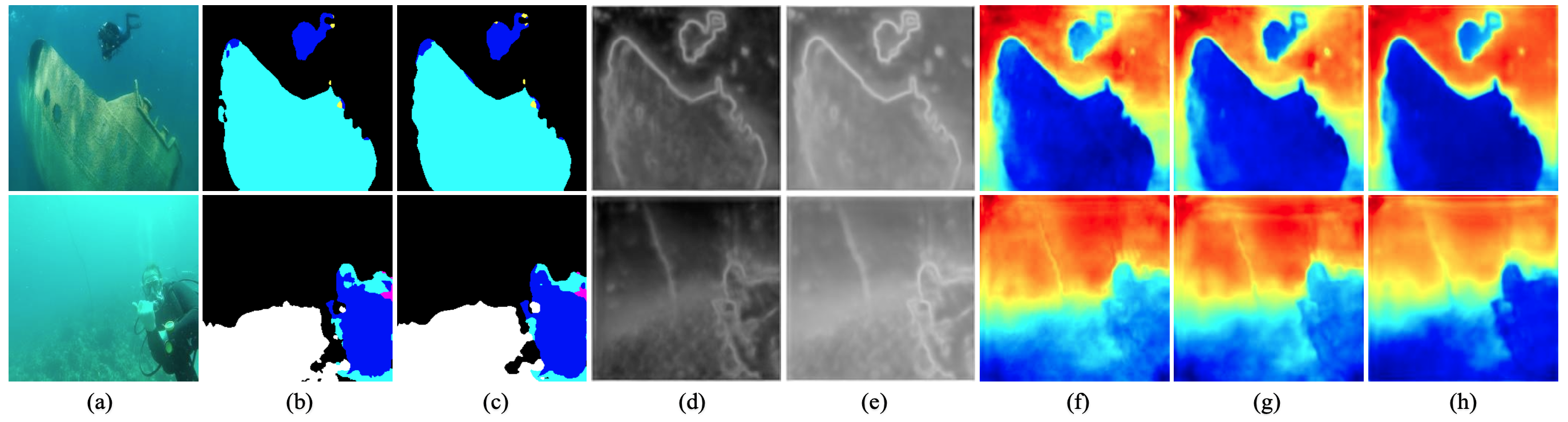

Fig. 5. Visualized intermediate results of each branch: (a) input underwater images; (b) and (c) initial and middle segmentation masks; (d) and (e) initial and middle edge maps; (f)-(h) initial, middle and final depth maps.

Citation

@article{w2023wsuid-net,
  title={Learning Scribbles for Dense Depth: Weakly-Supervised Single Underwater Image Depth Estimation Boosted by Multi-Task Learning},
  author={Kunqian Li and Xiya Wang and Wenjie Liu and Qi Qi and Guojia Hou and Zhiguo Zhang and Kun Sun},
  journal={arXiv preprint arXiv:xxxx.xxxx},
  year={2023}
}